Unlike photographic images, however, visual objects in motion typically appear relatively sharp and clear (e.g., Bex, Edgar, & Smith, 1995; Burr & Morgan, 1997; Farrell, Pavel, & Sperling, 1990; Hammett, 1997; Hogben & Di Lollo, 1985; Ramachandran, Rao, & Vidyasagar, 1974; Westerink & Teunissen, 1995).

Our research suggests that the extent of motion blur is controlled by visual masking mechanisms in retinotopic representations.

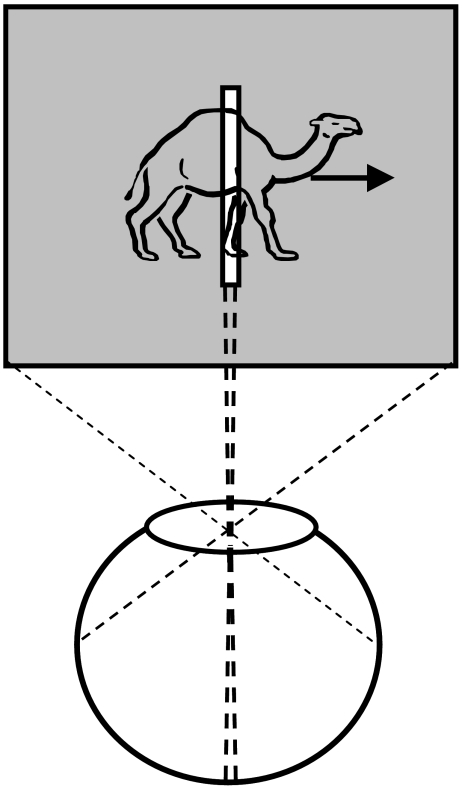

However, masking mechanisms only partly solve the motion blur problem. In the picture above, masking would make the motion streaks appear shorter, thereby reducing the amount of blur. Yet even when deblurred, moving objects would still have a ghost-like appearance. Compare the fast-moving targets, the slower-moving targets, and the stationary objects in the picture: rapidly moving objects look ghost-like, with no significant form information; slowly moving objects have a more developed form; and static objects have the clearest form. This is because static objects remain on a fixed region of the film long enough to expose the chemicals sufficiently, whereas moving objects expose each part of the film only briefly and thus fail to provide sufficient exposure to any specific part of it. Similarly, in retinotopic space, a moving object stimulates each retinotopically localized receptive field only briefly, so incompletely processed form information would spread across retinotopic space, just like the ghost-like appearances in the picture. We call this the “problem of moving ghosts”. We hypothesize that information about the form of moving targets is conveyed to a non-retinotopic space, where it can accrue over time and allow neural processing to synthesize shape information.
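The exposure argument above can be illustrated with a toy sketch. It is not a model of the visual system: the function name `exposure_profiles`, the one-dimensional "retina", and the unit of exposure per frame are all assumptions made for illustration. A bar moves across a row of fixed locations, and exposure is summed either at each fixed (retinotopic) location or at each part of the bar in a frame that moves with it.

```python
def exposure_profiles(speed, n_frames=12, width=40, object_width=3):
    """Accumulate per-frame exposure from a bar moving along a 1-D 'retina'.

    Returns (retinotopic, object_centered):
      retinotopic     - exposure summed at each fixed retinal location
      object_centered - exposure summed at each part of the bar, in a
                        reference frame that moves with the bar
    All parameters are illustrative assumptions.
    """
    retinotopic = [0] * width
    object_centered = [0] * object_width
    for t in range(n_frames):
        start = speed * t  # position of the bar's first element at frame t
        for i in range(object_width):
            pos = start + i
            if 0 <= pos < width:
                retinotopic[pos] += 1    # same retinal location
                object_centered[i] += 1  # same part of the object
    return retinotopic, object_centered


static_ret, static_obj = exposure_profiles(speed=0)
slow_ret, slow_obj = exposure_profiles(speed=1)
fast_ret, fast_obj = exposure_profiles(speed=3)

# Peak exposure at any single retinal location falls as speed rises.
print(max(static_ret), max(slow_ret), max(fast_ret))
# Exposure per object part stays the same in the moving frame.
print(static_obj, fast_obj)
```

In this sketch the peak exposure at any fixed location drops as the bar moves faster, while the exposure accumulated per part of the bar in the moving frame does not depend on speed. That is the intuition behind letting form information accrue in a non-retinotopic space.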

COPERNICAN REVOLUTIONS IN THE BRAIN: REFERENCE FRAMES AND IMPLIED PERCEPTUAL AND COGNITIVE REVOLUTIONS

If retinotopic representations are mapped to non-retinotopic representations, what, then, are the appropriate representations to use in non-retinotopic spaces? Since the problem itself arises from a variety of motions, its solution can be found in reference frames built according to motion patterns.

To appreciate this, consider the problem faced by astronomers prior to the 16th century. They placed the Earth at the center of their system and tried to express planetary motions relative to this reference frame. The result was an overly complex system containing epicycles. A fundamental revolution occurred when Copernicus proposed a system in which the reference frame shifted from the Earth to the Sun.
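The effect of the choice of reference frame can be sketched numerically. The example below makes strong simplifying assumptions: circular, coplanar orbits, and rounded radii and periods for Earth and Mars (in astronomical units and years). The function names are hypothetical. In the Sun-centered frame each planet simply circles at a constant radius. Seen from Earth, the same motion periodically reverses its angular direction, which is the kind of behavior epicycles were introduced to describe.

```python
import math

# Rounded, illustrative values (assumption: circular coplanar orbits).
EARTH_RADIUS, EARTH_PERIOD = 1.0, 1.0      # AU, years
MARS_RADIUS, MARS_PERIOD = 1.524, 1.881    # AU, years


def position(radius, period, t):
    """Sun-centered (x, y) of a body on a circular orbit at time t (years)."""
    angle = 2 * math.pi * t / period
    return radius * math.cos(angle), radius * math.sin(angle)


def geocentric_angular_direction(t, dt=1e-4):
    """Direction of Mars's angular motion as seen from Earth at time t.

    Returns +1 for counter-clockwise (prograde) motion and -1 for
    clockwise (retrograde) motion, estimated with a finite difference.
    """
    def relative(time):
        ex, ey = position(EARTH_RADIUS, EARTH_PERIOD, time)
        mx, my = position(MARS_RADIUS, MARS_PERIOD, time)
        return mx - ex, my - ey

    x0, y0 = relative(t)
    x1, y1 = relative(t + dt)
    cross = x0 * (y1 - y0) - y0 * (x1 - x0)  # sign of the angular velocity
    return 1 if cross > 0 else -1


# Time between successive alignments of Sun, Earth, and Mars.
synodic_period = 1 / (1 / EARTH_PERIOD - 1 / MARS_PERIOD)

at_opposition = geocentric_angular_direction(0.0)
at_conjunction = geocentric_angular_direction(synodic_period / 2)
print(at_opposition, at_conjunction)
```

With these assumed values the Earth-centered direction is retrograde when the two planets are aligned on the same side of the Sun and prograde half a synodic period later, whereas the Sun-centered description never changes direction.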

In developmental psychology, Jean Piaget used the Copernican revolution analogy to highlight the shift of children’s perceptual and cognitive structures from self-centered (egocentric) reference frames to exocentric reference frames: “the child eventually comes to regard himself as an object among others in a universe that is made up of permanent objects (that is, structured in a spatio-temporal manner) and in which there is at work a causality that is both localized in space and objectified in things”.

Our research investigates the nature of non-retinotopic reference frames and their implications for perceptual and cognitive processes. Our recent and past findings can be found on the Publications page.